How do I start an AI project?

Not sure where to begin with AI and machine learning (ML) in ocean science? This decision tree is designed to guide you toward AI/ML information and resources that best fit your project needs.

Work your way through the tree by clicking the blue dots in each node. This will expand the tree and help you consider best AI/ML approaches to use with your data. Clicking the text in each node will take you to new pages that provide additional content.

Worked Examples

These case studies are designed to provide an overview of a complete ocean science AI/ML project, from data processing to real-world application. Feel free to adapt worked example notebooks to your projects.

- Coral Spawning

- Benthic Imagery

- Boat Noise Detection

- Deep Sea Organism ID

This project utilized artificial intelligence (AI) algorithms to develop an automated coral spawning alert system for the Florida Aquarium. Facing the challenge of labor-intensive and uncertain manual monitoring of coral spawning events, particularly in light of the Stony Coral Tissue Loss Disease (SCTLD) affecting coral species in the Caribbean, the project aimed to reduce staff time and improve monitoring efficiency.

By implementing AI technology, specifically an anomaly detection algorithm, the system can analyze real-time underwater video feeds from aquaria and identify instances of coral spawning. This automation enables timely alerts to users, streamlining the monitoring process and providing critical information for coral restoration efforts amidst the challenges posed by SCTLD.

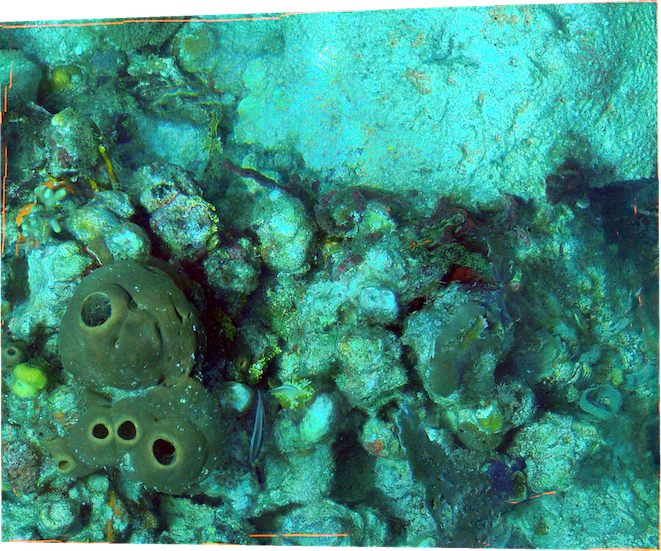

This project developed workflows and metadata standards for AI applications tailored to extract species abundance and percent cover information from benthic photo-quadrat imagery using the CoralNet environment. CoralNet is an online, open-source machine learning classifier that enables the identification of benthic species and their relative abundance using an unsupervised species annotation algorithm and a predefined set of labels of species and bottom types as training data.

This use case focused on 1) defining data and metadata requirements and standards for CoralNet applications using benthic imagery; 2) optimizing species identification and cover with imagery stored in CoralNet for training; and 3)

developing workflows and tools for subsetting and formatting photo-mosaic imagery for classification and percent cover analysis in CoralNet.

This project developed two types of workflows to prepare benthic imagery for use in CoralNet. The first workflow is called QuadrantRecon, which uses AI to identify quadrats in benthic photos and automatically crop the images to the dimensions of the quadrat. The second workflow is the Orthomosaic Tiler, which subsets large orthomosaic images that can be processed in CoralNet. The tiler is a script that can automatically subset images based on a shapefile, by pixel count, or by field dimensions for georeferenced orthomosaics.

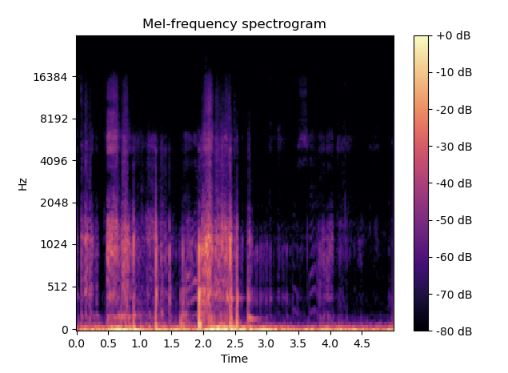

This project uses active learning to quickly adapt an existing bioacoustic recognition model to a new question: can we identify boat noise from underwater audio recordings. This project is adapted from the CCAI Tutorial: Modeling for Bioacoustic Monitoring created by Jenny Hamer, Google DeepMind, Rob Laber, Google Cloud, and Tom Denton, Google Research and using the Perch research project and model. Note: the current “Try Out a Notebook” links to this tutorial and will be replaced by the boat noise workbook in the near future.

Specifically, this notebook is used to build a model to identify general boat noise from underwater recordings. Scientists have been using hydrophones to record underwater sounds for decades, listening for noises like whale songs, grouper grunts, and shrimp snaps. Recently, there has been increased interest in using the noise from boats to get a general idea of the use of areas by anglers and boaters. Audio is especially useful to monitor large areas and areas far offshore where above-water cameras would be unable to get wide enough coverage. Additionally, audio files are much smaller than images or video which is important as the amount of data collected and stored is growing at a massive rate.

Instead of creating a new acoustic machine learning model from scratch, we are starting with the SurfPerch model. SurfPerch was trained on a combination of underwater reef noise, bird sounds from the Perch model, and general audio, giving us a robust model starting point. We then use transfer learning to build a specific classifier on top of the existing model. Here, we’re building a basic “Boat/No Boat” classifier using the SurfPerch model as a base.

The active learning component of this notebook is a way to quickly build up training data without having to listen to and classify hours and hours of audio. Active learning also keeps a human in the training loop. Simply put, unlabeled data is classified using the base model and the audio segments that the model has the most difficulty classifying are highlighted for manual labeling by the user. In this example with a Boat/No Boat classification, the audio segments that were classified as 50% confidence ‘Boat’ and 50% ‘No Boat’ are the starting points for the loop. By focusing training data on the audio that is most difficult for the existing model, the model is trained much faster and efficiently.

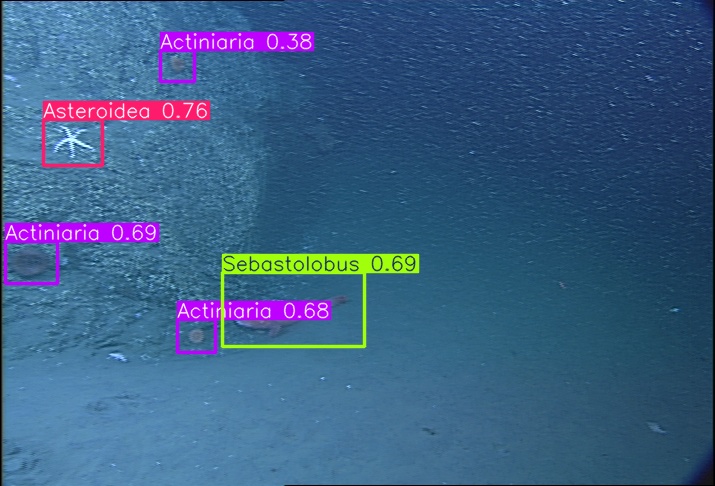

FathomNet is a large-scale open-source project working to improve the identification and classification of underwater organisms and objects from images. FathomNet is made up of four software platforms and solutions that aim to improve the accessibility and usability of ocean data and underwater AI. These platforms include 1) FathomNet Database, which collects and organizes labeled and unlabeled underwater images, 2) FathomNet Models, which are pre-trained models trained on the database, 3) FathomNet Portal, a web-based tool that serves an interface for using pre-trained models, and 4) FathomVerse, a app-based game where citizen scientists help label underwater images to build up the library of images in the database.

FathomNet’s notebook allows users to evaluate the pre-trained models and provides a workflow for users to train their own models with default test images or on their own images or videos. Available models include organism identification, generic object detection, and trash detection.

Community Resources

You can find additional AI resources in this directory continuously updated by the SECOORA community.

If you would like to contribute, please send an email to the following address to request access: ai@secoora.org

| Project | Description | Source |

|---|---|---|

| Tutorials | ||

| ML on Landsat data | Access, resample, and rescale satellite data, and apply ML spectral clustering models to examine patterns over time. | Roumis and Huang; Project Pythia |

Google Vertex AI | Broad tutorial for training and deploying ML models and AI applications across imagery, text, video, and tabular data. | Google AI |

| Computer Vision Across Marine Science | Detailed notebook with an emphasis on marine object detection and classification. | Ada Carter and Katie Bigham; University of Washington course OCEAN 462-C |

| Labeled Datasets | ||

| List of LILA datasets | Extensive list of labeled datasets relevant to conservation. | Maintained by Dan Morris (Google AI). LILA BC was created by a working group that includes representatives from Ecologize, Zooniverse, the Evolving AI Lab, and Snapshot Safari. |

| Additional datasets referenced by, but not hosted by LILA | Additional list of labeled datasets relevant to conservation | Maintained by Dan Morris (Google AI). |

| Source Cooperative | Data publishing utility that allows trusted organizations and individuals to share data using standard HTTP; Several geospatial applications. | Radiant Earth |

| Microsoft AI for Good Lab | ||

| Google Cloud Public Datasets | ||

| Fathomnet | Open-Source marine image repository with labeled and unlabeled data. | Monterey Bay Aquarium Research Institute (MBARI) |

| Imagery Resources | ||

| PaliGemma multimodal model | Vision language model (VLM) that accepts images or videos with text strings; for example “how many dogs are in this image?” | VLM by Google AI; Tutorial post by Roboflow |

| MegaDetector model | YOLOv5 model that detects animals, people, and vehicles in camera trap images. It does not identify animals to the species level, it just finds them. | Dan Morris (Google AI) |

| ML using benthic imagery | Resources for subsetting and formatting photo-mosaic imagery for classification and percent cover analysis in CoralNet. Subsetted tiles of 1x1m from photo-mosaics can be uploaded to the FK_photo-mosaic resource on CoralNet for processing. | Montes; University of Miami |

| Remote Sensing applications | Extensive list of papers, training datasets, benchmarks, and pre-trained weights for Remote Sensing models. | Skysense: A multi-modal remote sensing foundation model towards universal interpretation for earth observation imagery; Guo, Xin and Lao, Jiangwei and Dang, Bo and Zhang, Yingying and Yu, Lei and Ru, Lixiang and Zhong, Liheng and Huang, Ziyuan and Wu, Kang and Hu, Dingxiang and others; Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 27672-27683, 2024 |

| Acoustic Resources | ||

| Fish sound detector | Acoustic data, a Python library to work with Raven annotation files and generate spectrograms, and a model to detect the vocalization of fish sounds from spectrograms generated from hydrophones. | A collaboration between Mote Marine Laboratory & Aquarium, Southeast Coastal Ocean Observing Regional Association, and Axiom Data Science. |